Everything else is just a layer on top.

Language models, translation, speech recognition, and much of modern image generation all build on the same core architecture. Understanding the Transformer isn’t just academic curiosity; it’s the key to understanding the AI revolution.

GPT Literally Tells the Story

The name itself is a roadmap:

- Generative → Models create, not retrieve

- Pretrained → They learn from massive data before specialization

- Transformer → The breakthrough that changed the game

What Makes the Transformer Special?

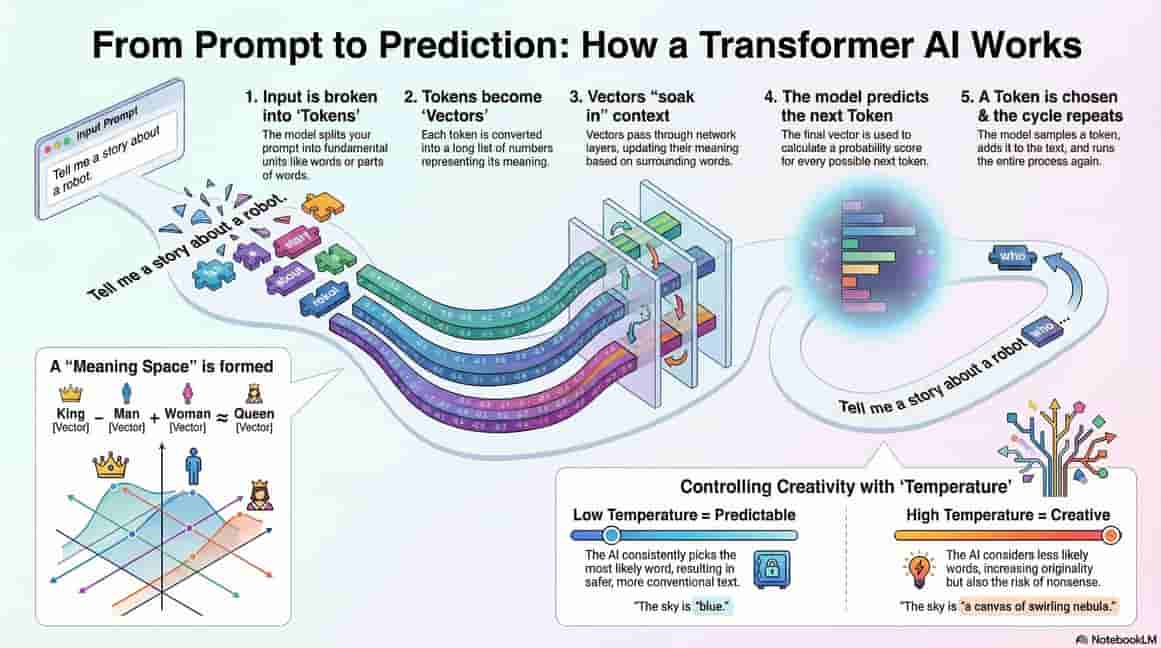

🧠 Attention is the Secret Sauce

Instead of processing words strictly one at a time, a Transformer lets every token attend to every other token in the sequence. Meaning isn’t isolated; it’s contextual.

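When you read “The bank was steep,” your brain instantly knows we’re talking about a riverbank, not a financial institution. Transformers get a similar effect through attention: the representation of “bank” is updated with information from “steep,” which pulls it toward the riverbank sense.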
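To make the idea concrete, here is a minimal sketch of scaled dot-product attention in plain Python. The 2-dimensional token vectors are invented for illustration, and the same vectors are reused as queries, keys, and values; a real Transformer derives those three from learned projections, which this sketch leaves out.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this token's query against every token's key.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Blend the value vectors using the attention weights.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Toy vectors, made up for this example (not taken from any real model).
tokens = ["the", "bank", "was", "steep"]
vectors = [[0.1, 0.0], [1.0, 0.2], [0.0, 0.1], [0.9, 0.4]]

outputs, weights = attention(vectors, vectors, vectors)

for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sentence. With these toy vectors, “bank” puts more weight on “steep” than on “was,” which is the kind of contextual pull described above.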

🔁 Layers That Refine Understanding

The architecture is elegantly simple:

- Attention layers let tokens communicate with each other

- MLP layers refine each token’s representation independently, so every position is processed in parallel

Under the hood, it’s just matrix math — scaled to billions of parameters. But that scale transforms simple operations into something that resembles understanding.
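As a rough illustration of the “just matrix math” point, the sketch below applies one small MLP to each token vector separately. The weights are made up, ReLU stands in for the activation, and the layer normalization used in real models is omitted. The residual addition at the end mirrors how Transformer blocks add each layer’s output back onto its input.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def relu(vector):
    """Zero out negative entries."""
    return [max(0.0, x) for x in vector]

def mlp(token_vector, w_in, w_out):
    """Expand, apply a nonlinearity, project back, then add the input."""
    hidden = relu(matvec(w_in, token_vector))
    update = matvec(w_out, hidden)
    return [x + u for x, u in zip(token_vector, update)]  # residual connection

# Hypothetical weights: 2 dimensions -> 3 hidden units -> 2 dimensions.
w_in = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]
w_out = [[0.5, 0.0, 0.5], [0.0, 0.5, -0.5]]

# Two toy token vectors. The same weights are applied to each one,
# and no token looks at any other token inside this layer.
sequence = [[1.0, 2.0], [3.0, 1.0]]
refined = [mlp(token, w_in, w_out) for token in sequence]
print(refined)
```

Because the MLP never mixes information between positions, the list comprehension could run over all tokens at once, which is what lets hardware process them in parallel.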

🎯 Generation is a Simple Loop

The magic of text generation is surprisingly straightforward:

- Predict the next token

- Sample a token from the predicted probability distribution

- Append to the sequence

- Repeat

Run at scale, this loop produces text that shows reasoning, creativity, and structure, one token at a time.
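The loop above can be sketched in a few lines of Python. The “model” here is a hypothetical hand-written lookup table (`BIGRAMS`) that only looks at the last token; a real Transformer would compute these probabilities from the whole sequence. The names `next_token_probs` and `generate` are illustrative, not from any library.

```python
import random

# Hypothetical stand-in for a trained model: next-token probabilities
# keyed by the previous token only.
BIGRAMS = {
    "<start>": {"the": 1.0},
    "the": {"bank": 0.6, "river": 0.4},
    "bank": {"was": 1.0},
    "river": {"was": 1.0},
    "was": {"steep": 0.7, "wide": 0.3},
    "steep": {"<end>": 1.0},
    "wide": {"<end>": 1.0},
}

def next_token_probs(sequence):
    """Toy model: return a probability for each candidate next token."""
    return BIGRAMS[sequence[-1]]

def generate(max_tokens=10, seed=0):
    rng = random.Random(seed)  # seeded so runs are repeatable
    sequence = ["<start>"]
    for _ in range(max_tokens):                      # 4. repeat
        probs = next_token_probs(sequence)           # 1. predict
        candidates, weights = zip(*probs.items())
        token = rng.choices(candidates, weights=weights)[0]  # 2. sample
        if token == "<end>":
            break
        sequence.append(token)                       # 3. append
    return sequence[1:]  # drop the <start> marker

result = generate()
print(" ".join(result))
```

Changing the seed can change which branch is sampled at “the” and “was,” which is why the same prompt can yield different completions.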

🎛️ Temperature Controls Personality

Ever wondered why AI responses can feel different?

- Lower temperature → Precise, predictable outputs

- Higher temperature → Creative, exploratory responses

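Temperature rescales the model’s scores before they become probabilities: low values sharpen the distribution toward the likeliest token, and high values flatten it so less likely tokens get picked more often. It’s just a dial, but it dramatically changes the character of the output.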
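A common way to implement this dial is to divide the model’s raw scores (logits) by the temperature before applying softmax. The sketch below assumes that formulation; the logits are made-up numbers and `softmax_with_temperature` is an illustrative name.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up scores for three candidate tokens.
logits = [2.0, 1.0, 0.1]

cold = softmax_with_temperature(logits, temperature=0.5)
neutral = softmax_with_temperature(logits, temperature=1.0)
hot = softmax_with_temperature(logits, temperature=2.0)

for name, probs in [("T=0.5", cold), ("T=1.0", neutral), ("T=2.0", hot)]:
    print(name, [round(p, 3) for p in probs])
```

At the lower temperature the top token takes a larger share of the probability, and at the higher temperature the three options move closer together.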

The Bottom Line

What looks like intelligence is really structure + scale + repetition done extremely well.

Once you get this, modern AI stops feeling magical — and starts feeling buildable.

The Transformer isn’t just an architecture. It’s a lens through which the entire field of AI becomes comprehensible.
