If your AI agent still uses direct function calls, it’s quietly burning tokens, money, and reliability.

The real upgrade isn’t smarter prompts — it’s a better execution model.

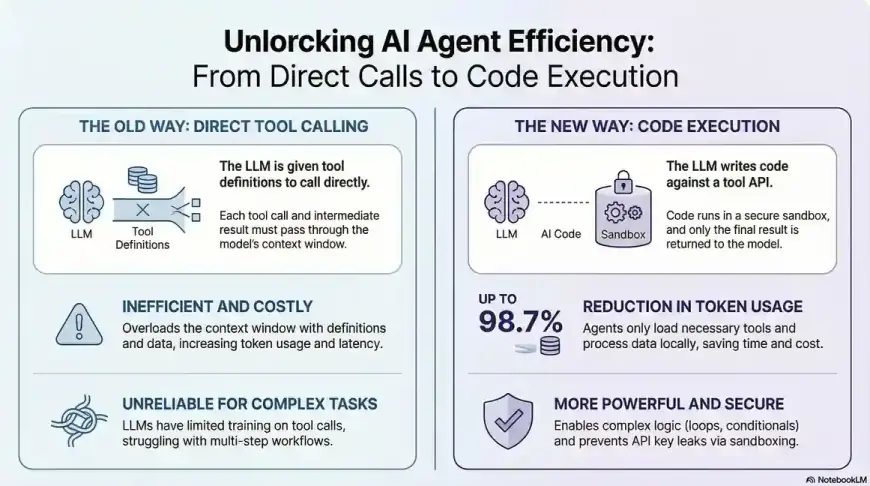

The Hidden Tax of Traditional Tool Calling

Most agent setups today treat LLMs like glorified tool routers:

- Every tool schema bloats the context window

- Every intermediate result flows back through the model

- Latency grows, costs spike, and complex workflows become fragile

This is the hidden tax of traditional MCP tool calling. And it’s costing you more than you realize.

The Shift: Code Mode

Instead of forcing LLMs into unnatural tool syntax, we let them do what they’ve already mastered: write real code.

Here’s why that matters:

🧠 Play to Native Strengths

LLMs have absorbed millions of lines of real-world code. Writing TypeScript against an API is far more reliable than schema-driven tool calls.

The model isn’t guessing at parameter formats or wrestling with JSON schemas. It’s doing what it was trained to do — write code that works.

📉 Context Efficiency at Scale

Agents can loop, branch, and process massive datasets inside a sandbox — then return only the final answer.

Token usage drops by 90–98%.

Instead of ping-ponging results through the model for every operation, the code runs to completion and surfaces just what matters.

⚡ Execution Beats Orchestration

Code runs inside lightweight V8 Isolates that spin up in milliseconds. Fast, disposable, and isolated by default.

No container cold starts. No heavyweight runtimes. Just instant execution.

🔐 Security Without Compromises

Secrets live in secure bindings. No internet access. No key exposure. No accidental leaks.

The sandbox is locked down by design. Your API keys never touch the model’s context.

A Mindset Shift

This isn’t just an optimization. It’s a fundamental change in how we think about agents.

Stop designing agents like prompt-driven automations.

Start designing them like autonomous, secure software engineers.

The agent doesn’t need to understand every tool’s schema. It needs to understand the problem and write code that solves it.

The Future of Agents

The future of agents won’t be won by clever prompts — it’ll be won by:

- Reliable execution over brittle tool chains

- Clean control flow over nested function calls

- Sane architecture over prompt engineering hacks

The teams building the most capable agents aren’t optimizing prompts. They’re building execution environments that let LLMs do what they do best.

Building AI-powered features? Check out OpenDots — where we’re using these principles to create intelligent community connections.